Abstract

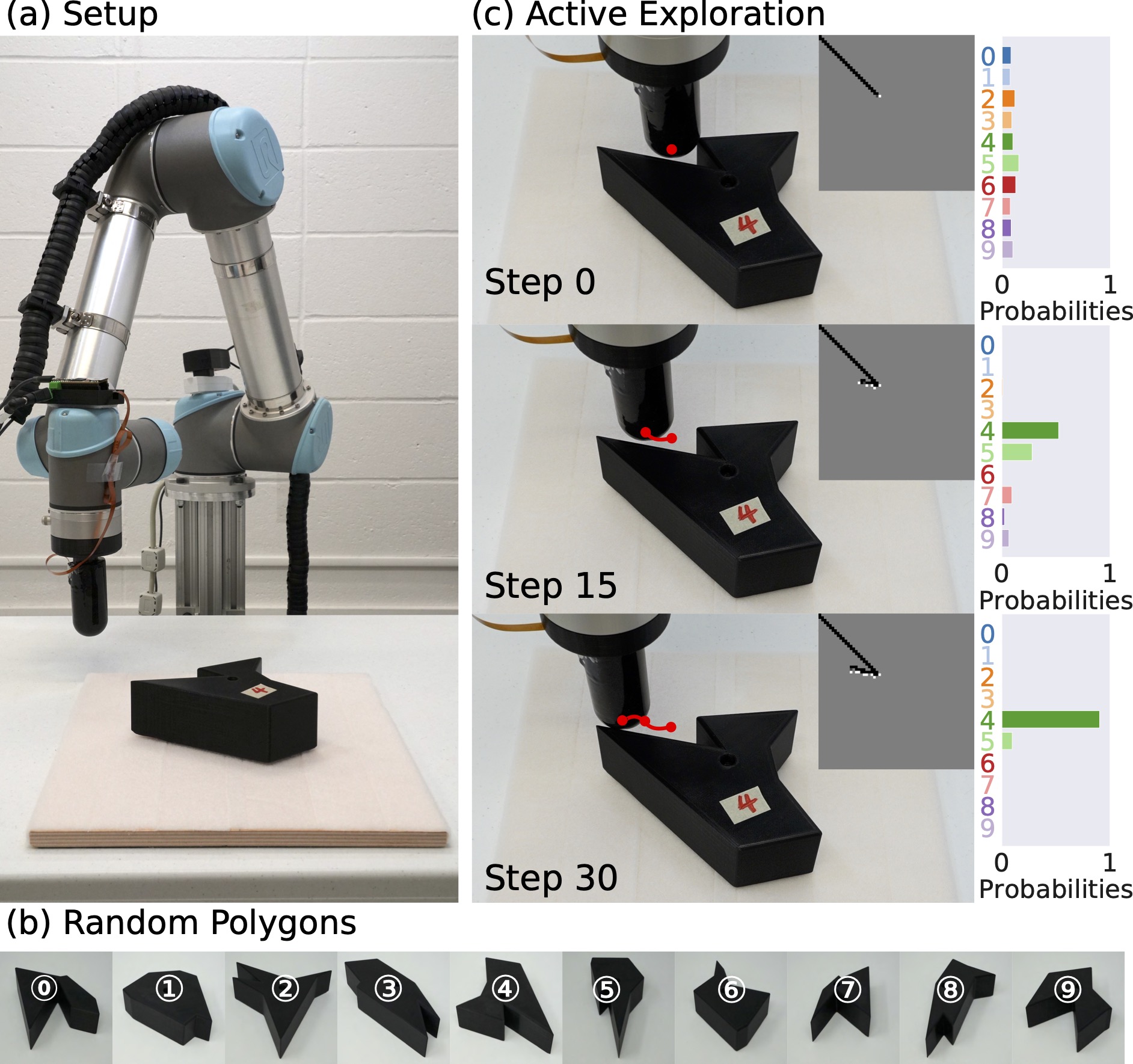

Inspired by the human ability to perform complex manipulation in the complete absence of vision (like retrieving an object from a pocket), the robotic manipulation field is motivated to develop new methods for tactile-based object interaction. However, tactile sensing presents the challenge of being an active sensing modality: a touch sensor provides sparse, local data, and must be used in conjunction with effective exploration strategies in order to collect information. In this work, we focus on the process of guiding tactile exploration, and its interplay with task-related decision making. We propose TANDEM (TActile exploration aNd DEcision Making), an architecture to learn efficient exploration strategies in conjunction with decision making. Our approach is based on separate but co-trained modules for exploration and discrimination. We demonstrate this method on a tactile object recognition task, where a robot equipped with a touch sensor must explore and identify an object from a known set based on binary contact signals alone. TANDEM achieves higher accuracy with fewer actions than alternative methods and is also shown to be more robust to sensor noise.

Supplementary Video (1 min 30 s)

IROS Presentation Video (7 min)

Individual Real-world Demonstrations

The first example of each object corresponds to the examples shown in Figure 5 of the paper.

Object 0

Object 1

Object 2

Object 3

Note: sensor noise before the first contact is observed in example 1.

Object 4

Note: sensor noise after contact is observed in example 2.

Object 5

Object 6

Object 7

Note: in example 2, our method nearly converges on object 1, but then exploits the non-collision space to converge quickly on object 7.

Object 8

Note: sensor noise before the first contact is observed in example 1.

Object 9

Note: sensor noise after contact is observed in example 2.

BibTex

@article{xu2022tandem,
  title={TANDEM: Learning Joint Exploration and Decision Making with Tactile Sensors},
  author={Xu, Jingxi and Song, Shuran and Ciocarlie, Matei},
  journal={IEEE Robotics and Automation Letters},
  year={2022},
  publisher={IEEE}
}

Acknowledgment

This material is based upon work supported by the National Science Foundation under Award No. CMMI-2037101. Any opinions, findings and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation.