Abstract

Tactile recognition of 3D objects remains a challenging task. Compared to 2D shapes, the complex geometry of 3D surfaces requires richer tactile signals, more dexterous actions, and more advanced encoding techniques. In this work, we propose TANDEM3D, a method that applies a co-training framework for exploration and decision making to 3D object recognition with tactile signals. Building on our previous work, which introduced a co-training paradigm for 2D recognition problems, we introduce a number of advances that enable us to scale up to 3D. TANDEM3D is based on a novel encoder that builds a 3D object representation from contact positions and normals using PointNet++. Furthermore, by enabling 6DOF movement, TANDEM3D explores and collects discriminative touch information with high efficiency. Our method is trained entirely in simulation and validated with real-world experiments. Compared to state-of-the-art baselines, TANDEM3D achieves higher accuracy with fewer actions when recognizing 3D objects, and is also shown to be more robust to different types and amounts of sensor noise.
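To make the encoder input more concrete, the sketch below shows one way contact positions and normals could be collected into 6-D points (x, y, z, nx, ny, nz) and then downsampled with farthest point sampling, the centroid-selection step that PointNet++ set abstraction layers rely on. This is a minimal illustration under stated assumptions, not the authors' implementation: `ContactBuffer`, `add_contact`, and `farthest_point_sampling` are hypothetical names, normals are assumed to be normalized to unit length, and sampling is assumed to start from index 0 and use only the xyz coordinates.

```python
import math
from dataclasses import dataclass, field
from typing import List, Sequence, Tuple

# Hypothetical point layout: (x, y, z, nx, ny, nz) = contact position + unit normal.
Point6 = Tuple[float, float, float, float, float, float]


@dataclass
class ContactBuffer:
    """Hypothetical container that accumulates tactile contacts as 6-D points."""
    points: List[Point6] = field(default_factory=list)

    def add_contact(self, position: Sequence[float], normal: Sequence[float]) -> None:
        x, y, z = position
        nx, ny, nz = normal
        length = math.sqrt(nx * nx + ny * ny + nz * nz)
        if length == 0.0:
            raise ValueError("contact normal must be non-zero")
        # Assumption: store the normal as a unit vector so only its direction is kept.
        self.points.append((x, y, z, nx / length, ny / length, nz / length))


def farthest_point_sampling(points: Sequence[Point6], k: int) -> List[int]:
    """Greedily pick k indices spread out in xyz space (normals are ignored).

    Starts from index 0 for determinism; returns all indices if k >= len(points).
    """
    n = len(points)
    if k <= 0:
        return []
    if k >= n:
        return list(range(n))
    selected = [0]
    # dist[i] = squared distance from point i to its nearest already-selected point.
    dist = [math.inf] * n
    for _ in range(k - 1):
        last = points[selected[-1]]
        for i, p in enumerate(points):
            d = sum((p[j] - last[j]) ** 2 for j in range(3))
            if d < dist[i]:
                dist[i] = d
        # Next centroid: the point farthest from every point selected so far.
        selected.append(max(range(n), key=lambda i: dist[i]))
    return selected
```

In a full pipeline, the sampled 6-D points would then be grouped and passed to a PointNet++-style encoder; that learned part is deliberately left out of this sketch.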


Supplementary Video (2min20sec)

Individual Real-world Demonstrations

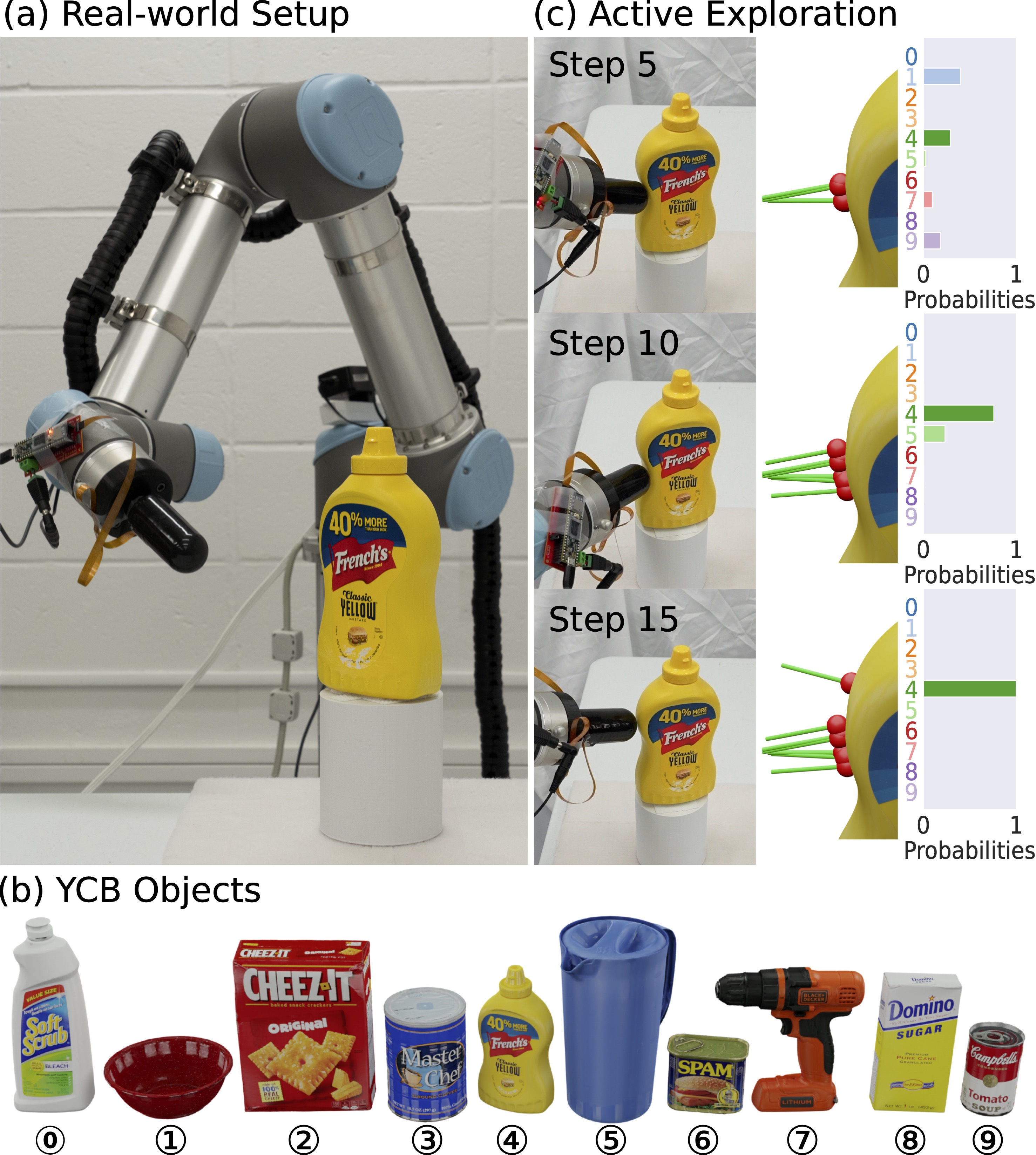

These real-world demonstrations of each object correspond to examples of Figure 5 in the paper. Notable behaviors:

- Object 0: the finger moves to the back of the object and contacts the recess, a distinctive geometric feature unique to object 0.

- Object 1: after only 9 actions, a correct prediction is made following a contact from beneath the bowl rim.

- Object 5: the finger moves up towards the pitcher handle, makes a small angular adjustment to contact the handle with the side of the finger, and then makes a decision.

- Object 7: the finger swings up to contact the drill from beneath and immediately makes the correct prediction.

- Object 8: the finger moves all the way up and makes a correct prediction through a top-down contact.

- Object 9: after only 11 actions, a correct prediction is made through a contact on the edge of the can's top surface.

Object 0 (Bleach Cleanser)

Object 1 (Bowl)

Object 2 (Cracker Box)

Object 3 (Master Chef Can)

Object 4 (Mustard Bottle)

Object 5 (Pitcher Base)

Object 6 (Potted Meat Can)

Object 7 (Power Drill)

Object 8 (Sugar Box)

Object 9 (Tomato Soup Can)

BibTeX

@article{xu2022tandem3d,
  title={TANDEM3D: Active Tactile Exploration for 3D Object Recognition},
  author={Xu, Jingxi and Lin, Han and Song, Shuran and Ciocarlie, Matei},
  journal={arXiv preprint arXiv:2209.08772},
  year={2022}
}

Acknowledgment

This material is based upon work supported by the National Science Foundation under Award No. CMMI-2037101. Any opinions, findings and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation.